Harnessing the Power of Neural Operators with Automatically Encoded Conservation Laws

Using the theory of differential forms to hard-code divergence-free constraints in neural operators

Quick Summary

If an image is worth a thousand words, then in physics a PDE is worth a thousand observations, or more. The authors of the Conservation law-encoded Neural Operator (ClawNO) construct a neural operator whose architecture ensures that the conservation law is followed to a T. For instance, they manage to learn the Navier-Stokes differential operator with only 10 observations (with approximately 11% error, which is half the error of the next best competitor). The trick is to hard-code the divergence-free condition through a skew-symmetric matrix in the output of their model. Earlier work shows that the divergence-free condition is closely tied to conservation laws, and that a divergence-free field can be expressed through the derivatives of a skew-symmetric matrix. ClawNO modifies the output of any neural operator to produce such a skew-symmetric matrix, so the conservation law is satisfied by default. One might wonder: if we know that the divergence-free condition should be satisfied, why not add it as a loss term, in the manner of the Physics-Informed Neural Operator, and check its performance? This question is not answered in the paper.

Context: A continuity equation states that the rate of change of a conserved quantity within a volume is equal to the net flux of that quantity through its boundary. In fluid mechanics, this is expressed as:

\[\frac{\partial \rho}{\partial t} + \nabla \cdot ( \rho \mathbf{u} ) = 0,\]
where \(\rho\) is the density and \(\mathbf{u}\) is the velocity field. Incompressibility implies constant \(\rho\), so the time derivative vanishes and \(\rho\) factors out of the divergence, which leaves:

\[\nabla \cdot \mathbf{u} = 0.\]
This condition is often referred to as the divergence-free constraint.

Standard Neural Operators (NOs) learn mappings between function spaces purely from data, without explicitly enforcing conservation laws. As a result, they lack robustness in small-data regimes and may violate physical laws.

Proposed solution: The paper proposes ClawNO, a Neural Operator that automatically enforces conservation laws within its architecture, ensuring that the output of the model is divergence-free.

Physics-Informed Neural Operators (PINO) use soft constraints: they are imposed through additional loss terms, which penalize violations but do not guarantee that the constraint is satisfied. ClawNO instead uses hard constraints that are enforced by the architecture itself, leading to more stable and data-efficient learning.
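To make the contrast concrete, here is a minimal sketch of what a soft divergence constraint could look like. It is not PINO's actual loss and not code from the paper: the function names, the periodic central-difference stencil, and the `weight` value are all assumptions chosen for illustration.

```python
import numpy as np

def central_diff(f, axis, h):
    """Periodic central difference along `axis` with grid spacing h."""
    return (np.roll(f, -1, axis=axis) - np.roll(f, 1, axis=axis)) / (2.0 * h)

def divergence_penalty(u_x, u_y, h):
    """Mean squared discrete divergence of a 2D velocity field."""
    div = central_diff(u_x, 0, h) + central_diff(u_y, 1, h)
    return float(np.mean(div ** 2))

def soft_constrained_loss(pred, target, h, weight=0.1):
    """Data misfit plus a weighted divergence penalty (hypothetical weighting)."""
    data_loss = float(np.mean((pred - target) ** 2))
    penalty = divergence_penalty(pred[0], pred[1], h)
    return data_loss + weight * penalty, data_loss, penalty

# Toy data on a periodic 32 x 32 grid; `pred` stands in for a model output.
n = 32
h = 2 * np.pi / n
rng = np.random.default_rng(0)
target = rng.standard_normal((2, n, n))
pred = target + 0.1 * rng.standard_normal((2, n, n))

total, data_loss, penalty = soft_constrained_loss(pred, target, h)
print(f"data loss = {data_loss:.4f}, divergence penalty = {penalty:.4f}")
```

Training against such a loss pushes the penalty toward zero, but nothing in the model forces it to reach zero, and the result depends on how `weight` is tuned. That is the gap a hard constraint is meant to close.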

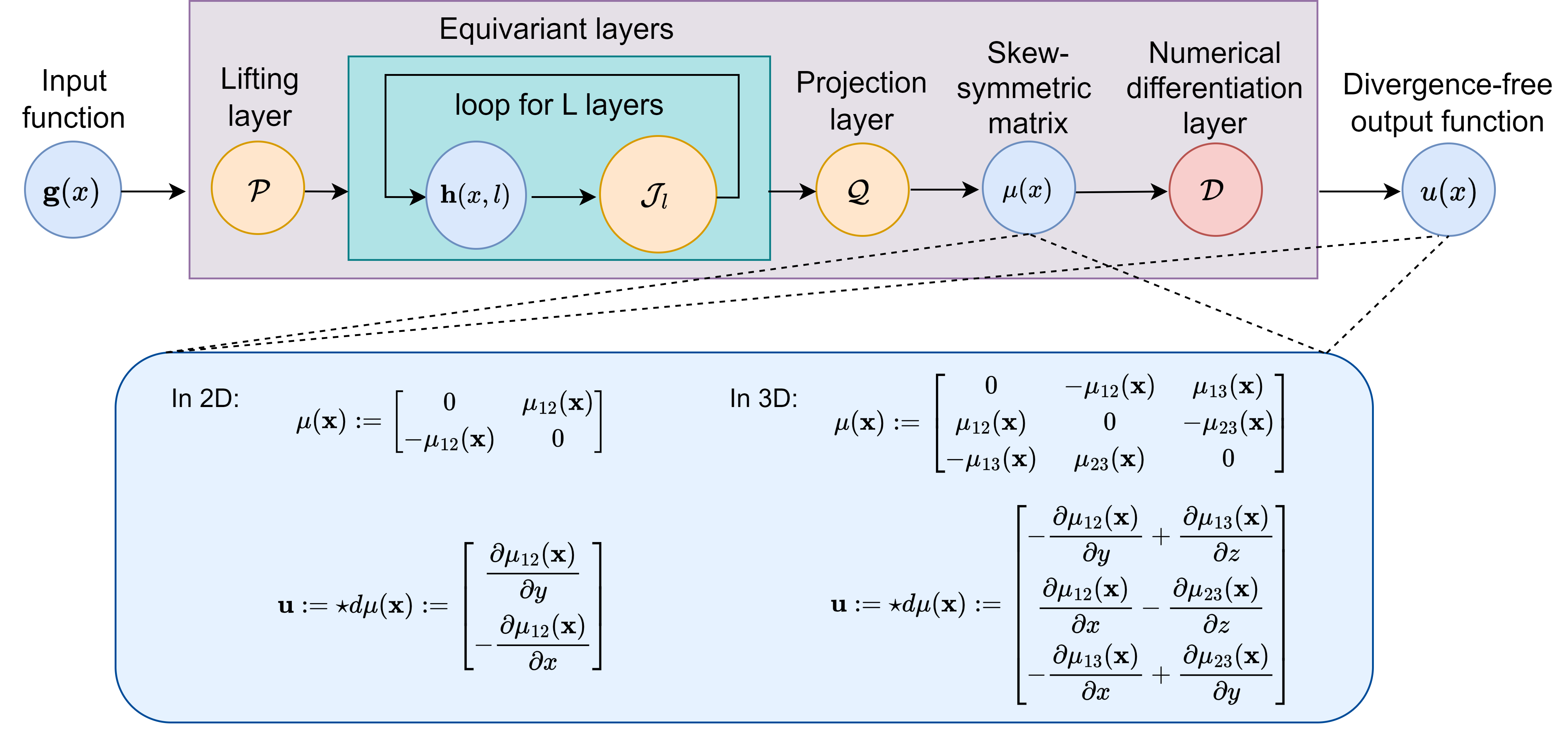

Instead of directly outputting the solution \(u\) after the projection layer \(Q\), ClawNO’s projection layer produces a latent vector used to construct a skew-symmetric matrix \(\mu\). The final output \(u\) is obtained by applying a weighted linear combination operator \(D\), which approximates the row-wise divergence operator. Because the row-wise divergence of a skew-symmetric matrix field is itself divergence-free (mixed partial derivatives commute while \(\mu_{ij} = -\mu_{ji}\), so the terms cancel in pairs), the model enforces this constraint inherently.
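The construction can be checked numerically in 2D, where a skew-symmetric matrix has a single free entry \(\psi\): \(\mu = \begin{pmatrix} 0 & \psi \\ -\psi & 0 \end{pmatrix}\), so \(u = (\partial_y \psi, -\partial_x \psi)\). The sketch below is an illustration under assumptions, not the authors' implementation: it uses a periodic grid, a simple central-difference stencil as a stand-in for \(D\), and random noise as a stand-in for the latent output of the projection layer.

```python
import numpy as np

def central_diff(f, axis, h):
    """Periodic central difference: a weighted combination of neighbouring values."""
    return (np.roll(f, -1, axis=axis) - np.roll(f, 1, axis=axis)) / (2.0 * h)

def velocity_from_latent(psi, h):
    """Row-wise divergence of mu = [[0, psi], [-psi, 0]] (axis 0 = x, axis 1 = y)."""
    u_x = central_diff(psi, 1, h)    # d/dy of mu_12 = psi
    u_y = -central_diff(psi, 0, h)   # d/dx of mu_21 = -psi
    return u_x, u_y

def divergence(u_x, u_y, h):
    """Discrete divergence using the same stencil."""
    return central_diff(u_x, 0, h) + central_diff(u_y, 1, h)

n = 64
h = 2 * np.pi / n
rng = np.random.default_rng(0)
psi = rng.standard_normal((n, n))  # hypothetical latent field from the network

u_x, u_y = velocity_from_latent(psi, h)
max_div = float(np.abs(divergence(u_x, u_y, h)).max())
print(f"max |div u| = {max_div:.2e}")
```

Whatever values `psi` takes, the discrete divergence vanishes up to floating-point round-off, because the two difference operators act on different axes and therefore commute. Note that this holds with respect to the same discrete stencil; the continuous divergence is only matched up to the accuracy of that stencil.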

For non-periodic domains, ClawNO leverages the Fourier Continuation (FC) technique to extend the output into a periodic function.

- Pros:

- Improves robustness in small-data regimes.

- Is model-agnostic: this approach could be applied to any neural operator architecture.

- Cons:

- May add computational cost, since building the skew-symmetric matrix and applying the operator \(D\) are extra steps on top of the base neural operator.

- The model was compared to the standard FNO but not to PINO with conservation-law constraints, which would have provided a fairer comparison.

- Because the operator \(D\) only approximates the divergence, the output may not be exactly divergence-free in the continuous sense.