Self-Adaptive PINNs using a Soft Attention Mechanism

Additional learning of multiplicative soft attention masks that weight each training point individually

Context: depending on the PDE, PINNs may fail to fit the residual, boundary, or initial conditions in “rapidly varying regions”.

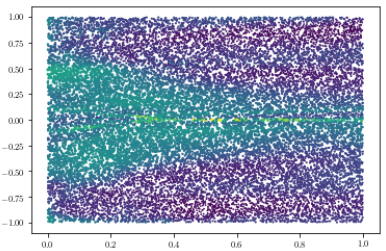

Proposed solution: The neural network learns which regions of the solution are difficult and is forced to focus on them. The self-adaptation weights define a multiplicative soft attention mask, like those used in computer vision. Each training point has its own self-adaptation weight. More formally, these are trainable, nonnegative self-adaptation weights for the initial points \(\boldsymbol{\lambda}_0\), boundary points \(\boldsymbol{\lambda}_b\), and residual points \(\boldsymbol{\lambda}_r\), respectively. The corresponding loss reads

$$ \begin{equation} \mathcal{L}\left(\boldsymbol{w}, \boldsymbol{\lambda}_r, \boldsymbol{\lambda}_b, \boldsymbol{\lambda}_0\right)=\mathcal{L}_s(\boldsymbol{w})+\mathcal{L}_r\left(\boldsymbol{w}, \boldsymbol{\lambda}_r\right)+\mathcal{L}_b\left(\boldsymbol{w}, \boldsymbol{\lambda}_b\right)+\mathcal{L}_0\left(\boldsymbol{w}, \boldsymbol{\lambda}_0\right) \end{equation} $$
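As an illustration of how the point-wise weights might enter one of these terms, the residual loss could be written as below. This is a sketch under assumptions not stated in these notes: \(N_r\) is the number of residual points, \(r(\boldsymbol{x}_r^i; \boldsymbol{w})\) is the PDE residual at the \(i\)-th residual point, \(\lambda_r^i\) is the \(i\)-th entry of \(\boldsymbol{\lambda}_r\), and \(m\) is a nonnegative, increasing mask function (for instance \(m(\lambda)=\lambda\)). The normalization constant is a free choice.

$$ \begin{equation} \mathcal{L}_r\left(\boldsymbol{w}, \boldsymbol{\lambda}_r\right)=\frac{1}{N_r} \sum_{i=1}^{N_r} m\left(\lambda_r^i\right)\left|r\left(\boldsymbol{x}_r^i ; \boldsymbol{w}\right)\right|^2 \end{equation} $$

Under the same assumptions, the boundary and initial terms would follow the same pattern with their own points and weights.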

The neural network parameters \(\boldsymbol{w}\) and the self-adaptation weights are learned jointly: the loss is minimized over \(\boldsymbol{w}\) and maximized over the weights,

$$ \begin{equation} \min _{\boldsymbol{w}} \max _{\boldsymbol{\lambda}_r, \boldsymbol{\lambda}_b, \boldsymbol{\lambda}_0} \mathcal{L}\left(\boldsymbol{w}, \boldsymbol{\lambda}_r, \boldsymbol{\lambda}_b, \boldsymbol{\lambda}_0\right). \end{equation} $$
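One common way to approach a min-max problem of this kind is to alternate or combine gradient descent on \(\boldsymbol{w}\) with gradient ascent on the weights. The sketch below does this on a toy problem and is not the authors' implementation. Everything in it is an assumption made for illustration: the toy ODE \(u'(x)=f(x)\), the two-parameter model \(u'(x;\boldsymbol{w})=w_0+w_1 x\), the weighted loss \(\frac{1}{N}\sum_i \lambda_i r_i^2\) with the weights used directly as the mask, the learning rates, and all function names.

```python
import math


def steep_source(x):
    """Hypothetical right-hand side with a rapidly varying region near x = 0.5."""
    return math.tanh(10.0 * (x - 0.5))


def residuals(w, xs, f):
    """Residual of the toy ODE u'(x) = f(x) for the model u'(x; w) = w[0] + w[1] * x."""
    return [w[0] + w[1] * x - f(x) for x in xs]


def descent_ascent_step(w, lam, xs, f, lr_w=0.1, lr_lam=0.1):
    """One simultaneous update: gradient descent on w, gradient ascent on lam.

    Assumed loss: L(w, lam) = (1/N) * sum_i lam_i * r_i(w)^2.
    """
    n = len(xs)
    r = residuals(w, xs, f)
    # Gradient of the loss with respect to the two model parameters.
    grad_w0 = 2.0 / n * sum(l * ri for l, ri in zip(lam, r))
    grad_w1 = 2.0 / n * sum(l * ri * x for l, ri, x in zip(lam, r, xs))
    # Gradient with respect to lam_i is r_i^2 / N, which is never negative,
    # so ascent only ever increases a weight, fastest where the residual is large.
    grad_lam = [ri * ri / n for ri in r]
    new_w = [w[0] - lr_w * grad_w0, w[1] - lr_w * grad_w1]
    new_lam = [l + lr_lam * g for l, g in zip(lam, grad_lam)]
    return new_w, new_lam


def train(xs, f, steps=200):
    """Run the descent-ascent loop from w = 0 and unit weights."""
    w, lam = [0.0, 0.0], [1.0] * len(xs)
    for _ in range(steps):
        w, lam = descent_ascent_step(w, lam, xs, f)
    return w, lam
```

Because each weight is stored per point, the list `lam` is only meaningful for the fixed set `xs` it was trained on, which is the constraint noted in the cons below.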

Cons:

- 3 hyperparameters per training instance, plus one learning rate

- Why a max? The maximization over the weights is a bit heuristic

- The same collocation points must always be used, since each weight is tied to one point

Proposed solution (SGD): an extension to handle varying collocation points. The basic idea is to use a spatio-temporal predictor of the self-adaptive weights at newly sampled points; a Gaussian process is used for this.
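A minimal sketch of such a predictor is given below, assuming a zero-noise-limit Gaussian process regression with a squared-exponential kernel over \((x, t)\). It is not the authors' implementation: the kernel, the length scale, the jitter term `noise`, the constant prior mean, the clipping at zero, and all function names are assumptions chosen for illustration. Only the posterior mean is computed.

```python
import math


def rbf(p, q, length_scale=0.2):
    """Squared-exponential kernel between two (x, t) points."""
    d2 = sum((a - b) ** 2 for a, b in zip(p, q))
    return math.exp(-0.5 * d2 / length_scale ** 2)


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_gp(points, lam, noise=1e-6, length_scale=0.2):
    """Return the prior mean and alpha = (K + noise * I)^-1 (lam - mean)."""
    mean = sum(lam) / len(lam)
    k = [[rbf(p, q, length_scale) + (noise if i == j else 0.0)
          for j, q in enumerate(points)] for i, p in enumerate(points)]
    alpha = solve(k, [l - mean for l in lam])
    return mean, alpha


def predict_weights(new_points, points, mean, alpha, length_scale=0.2):
    """GP posterior mean of the self-adaptive weight at newly sampled points."""
    preds = []
    for p in new_points:
        mu = mean + sum(a * rbf(p, q, length_scale) for a, q in zip(alpha, points))
        preds.append(max(mu, 0.0))  # assumption: clip to keep the weights nonnegative
    return preds
```

With this sketch, weights learned on one batch of collocation points could be carried over to a freshly sampled batch by calling `fit_gp` on the old points and `predict_weights` on the new ones. Far from any old point the prediction falls back to the mean of the learned weights.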

Other previously proposed solutions:

- Non-adaptive Weighting: put a premium on the loss term for the initial condition

- Learning Rate Annealing: the weights are learning rate coefficients that change at each epoch according to statistics computed from the back-propagation data

- Neural Tangent Kernel Weighting: based on the evolution of the eigenvalues of the neural tangent kernel

- Minimax Weighting: update the weights during training using gradient descent for the network weights and gradient ascent for the loss weights, seeking a saddle point in weight space